System Design URL Shortening Service

URL shortening services such as bit.ly and TinyURL are widely used to generate short aliases for long URLs. In this problem you design such a web service: given a long URL, the service returns a short URL, and given a short URL, it returns the original long URL.

For example, shortening the given URL through TinyURL:

https://www.interviewready.org/get-your-dream-job-with/?ref=leftbar-rightbar

We get the result: https://tinyurl.com/y7vg2xjl

Before you jump into the solution, always clarify the assumptions you’re making at the beginning of the interview. Ask questions to identify the scope of the system. This clears up initial doubts and tells you which specific details the interviewer wants you to consider in this service.

- Given a long URL, the service should generate a shorter and unique alias of it.

- When the user hits a short link, the service should redirect to the original link.

- Links will expire after a standard default time span.

- The system should be highly available. This is really important because if the service goes down, all URL redirections will start failing.

- URL redirection should happen in real-time with minimal latency.

- Shortened links should not be predictable.

Let’s start by making some assumptions about the traffic (for scalability) and the length of the URL.

Traffic

Let’s assume our service has 30M new URL shortenings per month. Let’s assume we store every URL shortening request (and associated shortened link) for 5 years. For this period the service will generate about 1.8 B records.

30 million * 5 years * 12 months = 1.8B

URL Length

Let’s assume we use 7 characters for the short URL key. Each character is drawn from a set of 62 characters [A-Z, a-z, 0-9], giving something like http://ad.com/abXdef2.

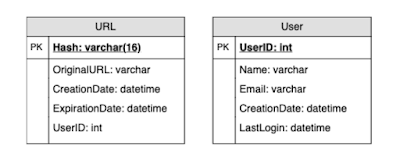

Data Capacity Modeling

Discuss the data capacity model to estimate the storage of the system. We need to understand how much data we might have to insert in our system. Think about the different columns or attributes that will be stored in our database and calculate the storage of data for five years. Let’s make the assumption given below for different attributes…

- Long URL: 2 KB on average (i.e. 2,048 characters)

- Short URL: 17 bytes (17 characters)

- created_at: 7 bytes

- expiration_length_in_minutes: 7 bytes

These assumptions give 2,048 + 17 + 7 + 7 = 2,079 bytes (about 2.03 KB) per shortened URL entry in the database. For 30M new URLs per month, the total is 30,000,000 * 2,079 bytes ≈ 62.4 GB per month, which is about 0.75 TB per year and about 3.74 TB over 5 years.
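The storage estimate can be reproduced with a few lines of arithmetic. This is a minimal sketch: the field sizes and traffic figures are the assumptions listed above, the variable names are illustrative, and decimal units (1 GB = 10^9 bytes) are assumed, so the results shift slightly under other unit conventions.

```python
# Back-of-the-envelope storage estimate from the assumptions in this section.
NEW_URLS_PER_MONTH = 30_000_000
RETENTION_YEARS = 5

# Per-record field sizes in bytes (assumed values from the list above).
FIELD_BYTES = {
    "long_url": 2048,
    "short_url": 17,
    "created_at": 7,
    "expiration_length_in_minutes": 7,
}

GB = 10**9   # decimal gigabyte (assumption)
TB = 10**12  # decimal terabyte (assumption)

record_bytes = sum(FIELD_BYTES.values())
total_records = NEW_URLS_PER_MONTH * 12 * RETENTION_YEARS
bytes_per_month = NEW_URLS_PER_MONTH * record_bytes
bytes_total = total_records * record_bytes

print(f"record size:        {record_bytes} bytes")
print(f"records in 5 years: {total_records:,}")
print(f"storage per month:  {bytes_per_month / GB:.2f} GB")
print(f"storage in 5 years: {bytes_total / TB:.2f} TB")
```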

We need to think about the reads and writes that will happen on our system for this amount of data. This will decide what kind of database (RDBMS or NoSQL) we need to use.

URL Shortening Logic (Encoding)

To convert a long URL into a unique short URL we can use an encoding such as Base62 or a hash function such as MD5. We will discuss both approaches.

Base62 Encoding: A Base62 encoder uses an alphabet of letters and digits, A-Z, a-z, and 0–9, for a total of 26 + 26 + 10 = 62 characters. A 7-character short URL can therefore represent 62^7 ~= 3,500 billion (3.5 trillion) URLs, far more than base10 would allow (with only the digits 0-9, 7 characters give just 10M combinations). Assuming the service generates 1,000 tiny URLs/sec, it would take about 110 years to exhaust these 3,500 billion combinations. We can generate a random number for the given long URL, convert it to base62, and use the resulting string as the short URL id.
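As an illustration, here is a minimal Base62 encoder and decoder. The alphabet ordering (digits, then lowercase, then uppercase) and the function names are assumptions of this sketch; any fixed ordering of the 62 characters works as long as encoding and decoding agree.

```python
import string

# Assumed alphabet order: 0-9, a-z, A-Z (62 characters in total).
ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase
BASE = len(ALPHABET)


def base62_encode(n: int) -> str:
    """Convert a non-negative integer to its Base62 string."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return ALPHABET[0]
    chars = []
    while n > 0:
        n, rem = divmod(n, BASE)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))


def base62_decode(s: str) -> int:
    """Convert a Base62 string back to the integer it encodes."""
    n = 0
    for ch in s:
        n = n * BASE + ALPHABET.index(ch)
    return n


def to_short_key(n: int, length: int = 7) -> str:
    """Left-pad the encoding so every key has the same length."""
    return base62_encode(n).rjust(length, ALPHABET[0])


print(62**7)                 # number of distinct 7-character keys
print(to_short_key(125))     # a small id, padded to 7 characters
```

Padding to a fixed length keeps every key at 7 characters, and the largest 7-character key corresponds to the integer 62^7 - 1.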

MD5 Encoding: MD5 produces a 128-bit digest, usually written as 32 hexadecimal characters, which is far longer than 7 characters. To get a 7-character tiny URL we keep only part of the digest, about 41 bits (7 base62 characters can hold just under 42 bits, since 62^7 lies between 2^41 and 2^42), and encode those bits in base62. Truncating the hash this way makes collisions likely at scale: two or more different long URLs may map to the same short URL id, which would corrupt data. So we need to check that the id doesn’t already exist in the database before using it.
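A minimal sketch of deriving a 7-character key from an MD5 digest follows. It keeps the top 41 bits of the digest, an assumption chosen because 2^41 < 62^7 guarantees the value fits in 7 Base62 characters. The function name and alphabet order are also illustrative.

```python
import hashlib
import string

# Assumed alphabet order: 0-9, a-z, A-Z.
ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase


def md5_short_key(long_url: str, bits: int = 41, length: int = 7) -> str:
    """Hash the URL with MD5, keep the top `bits` bits, encode in Base62."""
    digest = hashlib.md5(long_url.encode("utf-8")).digest()  # 16 bytes
    value = int.from_bytes(digest, "big") >> (128 - bits)    # truncate to top bits
    chars = []
    for _ in range(length):
        value, rem = divmod(value, 62)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))


key = md5_short_key("https://www.example.com/some/very/long/path?ref=abc")
print(key, len(key))
```

The same long URL always yields the same key, but different URLs can still collide after truncation, so the existence check described above is still needed.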

Database

We can use an RDBMS, which provides ACID guarantees, but relational databases are harder to scale for this workload. Sharding can address the scalability issue, but it increases the complexity of the system. With 30M new URLs per month there will be many conversions and far more short URL resolutions and redirections, so both reads and writes will be heavy. Scaling the RDBMS through sharding complicates the design once the system is distributed, and you may also need consistent hashing to balance traffic and DB queries across shards, which is a complicated process. For this amount of traffic, a relational database is therefore a poor fit for this design.

Now let’s talk about NoSQL!

The main drawback of a NoSQL database is eventual consistency: when we write something, it takes some time to replicate to the other nodes. However, our system needs high availability, and NoSQL fits this requirement. NoSQL can easily handle this volume of traffic and is easy to scale: we just keep adding nodes when we want to expand the storage.

Technique 1

Let’s discuss the mapping of a long URL into a short URL in our database. Assume we generate the tiny URL using base62 encoding; then we need to perform the steps given below…

- The tiny URL should be unique, so first check whether this tiny URL already exists in the database (doing get(tiny) on the DB). If it’s already present for some other long URL, generate a new short URL.

- If the short URL isn’t present in the DB, put the long URL and tiny URL in the DB (put(TinyURL, longURL)).

This technique works well with a single server, but with multiple servers it creates a race condition. Several servers may generate the same tiny URL for different long URLs at the same time. Each of them checks the database, finds the tiny URL unused, and then inserts it, so the same tiny URL ends up written for different long URLs, which corrupts the data.

We can use putIfAbsent(TinyURL, longURL) or an INSERT-IF-NOT-EXISTS condition while inserting the tiny URL, but this requires support from the DB. An RDBMS provides it, whereas an eventually consistent NoSQL database might not offer a putIfAbsent feature, or might offer it only at extra cost.
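To make the putIfAbsent idea concrete, here is a minimal sketch using SQLite from Python's standard library as a stand-in for a relational store. The table name `url_map`, its columns, and the helper `put_if_absent` are assumptions for illustration. The primary-key constraint is what makes the check and the insert a single atomic step.

```python
import sqlite3

# In-memory database as a stand-in for the real store (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE url_map (short_key TEXT PRIMARY KEY, long_url TEXT NOT NULL)"
)


def put_if_absent(conn: sqlite3.Connection, short_key: str, long_url: str) -> bool:
    """Insert the mapping only if short_key is unused; return True on success."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO url_map (short_key, long_url) VALUES (?, ?)",
                (short_key, long_url),
            )
        return True
    except sqlite3.IntegrityError:
        # The key already exists: the caller should generate a new short key.
        return False


print(put_if_absent(conn, "abXdef2", "https://example.com/first"))   # inserted
print(put_if_absent(conn, "abXdef2", "https://example.com/second"))  # rejected
```

Because the database enforces uniqueness, two servers racing on the same key cannot both succeed, unlike a separate get followed by a put.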

Technique 2 (MD5 Approach)

Encode the long URL using MD5 and take only the first 7 characters to generate the TinyURL. The first 7 characters could be the same for different long URLs, so check the DB (as discussed in technique 1) to verify that the TinyURL is not already in use.

MD5 saves some space in the database because the same long URL always produces the same TinyURL, but for two different long URLs we face the same problem as in technique 1. We could use putIfAbsent, but the NoSQL database may not support this feature. So let’s move to the third technique to solve this problem.
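The check-and-retry flow for the MD5 approach might look like the sketch below. An in-memory dict stands in for the database, and `dict.setdefault` plays the role of putIfAbsent within a single process only. The retry strategy (re-hashing the URL with an attempt suffix) and all names are assumptions of this sketch, not something the approach prescribes.

```python
import hashlib
import string

# Assumed alphabet order: 0-9, a-z, A-Z.
ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase


def short_key(text: str) -> str:
    """MD5 the text, keep the top 41 bits, and encode them as 7 Base62 chars."""
    value = int.from_bytes(hashlib.md5(text.encode("utf-8")).digest(), "big") >> 87
    chars = []
    for _ in range(7):
        value, rem = divmod(value, 62)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))


def shorten(store: dict, long_url: str, max_attempts: int = 5) -> str:
    """Return a key for long_url, re-hashing with a suffix on collision."""
    for attempt in range(max_attempts):
        salted = long_url if attempt == 0 else f"{long_url}#{attempt}"
        candidate = short_key(salted)
        # setdefault stores the URL only if the key is free (single-process only).
        if store.setdefault(candidate, long_url) == long_url:
            return candidate
    raise RuntimeError("could not find a free short key")


store = {}
first = shorten(store, "https://example.com/a")
again = shorten(store, "https://example.com/a")  # same URL, same key, no new row
print(first, again, len(store))
```

Shortening the same long URL twice reuses the existing row, which is the space saving mentioned above. Across multiple servers the uniqueness check still has to be enforced by the database itself.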

Technique 3 (Counter Approach)

Using a counter is a good choice for a scalable solution because a counter only ever increases, so every new request gets a new value.

Single server approach:

- A single host or server (say database) will be responsible for maintaining the counter.

- When the worker host receives a request it talks to the counter host, which returns a unique number and increments the counter. When the next request comes the counter host again returns the unique number and this goes on.

- Every worker host gets a unique number which is used to generate TinyURL.

Problem: If the counter host goes down for some time, no new URLs can be generated, and if the request rate is high, the counter host might not be able to handle the load. So the challenges are a single point of failure and a single bottleneck.
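The single-counter idea can be sketched in-process, with threads standing in for worker hosts. The class name `CounterHost` and the thread pool are assumptions for illustration. In a real deployment the counter would live on a separate host or in the database, which is exactly where the single point of failure comes from.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class CounterHost:
    """Hands out strictly increasing, unique numbers (in-process stand-in)."""

    def __init__(self, start: int = 1) -> None:
        self._next = start
        self._lock = threading.Lock()

    def next_id(self) -> int:
        # The lock makes read-and-increment atomic across worker threads.
        with self._lock:
            value = self._next
            self._next += 1
            return value


counter = CounterHost()

# Eight "worker hosts" request 1,000 ids between them.
with ThreadPoolExecutor(max_workers=8) as pool:
    ids = list(pool.map(lambda _: counter.next_id(), range(1000)))

print(len(ids), len(set(ids)))  # every id is handed out exactly once
```

Every request funnels through the one lock, which mirrors the bottleneck described above: throughput is capped by the single counter.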

And what if there are multiple servers?

You can’t maintain a single counter and return its output to all the servers. To solve this problem we can use multiple internal counters, one per server, each with a different counter range. For example, server 1 covers 1 to 1M, server 2 covers 1M+1 to 2M, and so on. But this creates a new problem: if one of the counters goes down, it is difficult for another server to find out the range of the failed counter and take it over. Also, if one counter reaches its maximum limit, resetting it is difficult because there is no single host coordinating all these servers. The architecture gets messy because we don’t know which server is the master, which is a slave, and which one is responsible for coordination and synchronization.

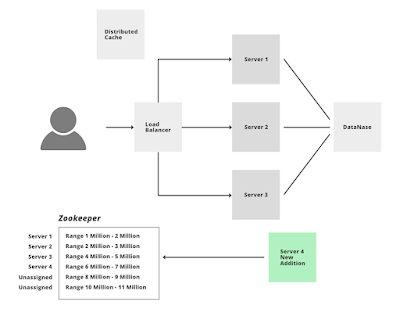

Solution: To solve this problem we can use a distributed service, ZooKeeper, to manage these tedious tasks and to handle the challenges of a distributed system such as race conditions, deadlocks, and partial failures. ZooKeeper is a distributed coordination service that manages a large set of hosts. It keeps track of things such as the naming of the servers, active servers, dead servers, and the configuration information of all the hosts. It provides coordination and maintains synchronization between the multiple servers.

Let’s discuss how to maintain a counter for distributed hosts using ZooKeeper.

- From the 3.5 trillion combinations, take the 1st billion combinations.

- In ZooKeeper, maintain the ranges: divide the 1st billion into 1000 ranges of 1 million each, i.e. range 1->(1 – 1,000,000), range 2->(1,000,001 – 2,000,000)…. range 1000->(999,000,001 – 1,000,000,000)

- When servers are added, they ask ZooKeeper for an unused range. Suppose the W1 server is assigned range 1; W1 then generates tiny URLs by incrementing its counter and applying the encoding technique. Every number is unique, so there is no possibility of collision and no need to keep checking the DB to see whether the URL already exists. We can directly insert the mapping of the long URL and short URL into the DB.

- In the worst case, if one of the servers goes down, we only lose the unused part of its range, at most a million combinations (which can’t be reused), but since we have 3.5 trillion combinations this loss is not a concern.

- If one of the servers reaches the end of its range, it can take a fresh range from ZooKeeper.

- Adding a new server is also easy. ZooKeeper will assign an unused counter range to the new server.

- When the 1st billion is exhausted, we take the 2nd billion and continue the process.
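The range-leasing scheme in the steps above can be sketched as follows. `RangeCoordinator` is an in-memory stand-in for the coordination service and does not use the real ZooKeeper API. The class names, the lock, and the Base62 alphabet order are all assumptions for illustration.

```python
import string
import threading

# Assumed alphabet order: 0-9, a-z, A-Z.
ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase


def base62(n: int, length: int = 7) -> str:
    """Encode n in Base62, left-padded to a fixed length."""
    chars = []
    while n > 0:
        n, rem = divmod(n, 62)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars)).rjust(length, ALPHABET[0])


class RangeCoordinator:
    """In-memory stand-in for the coordinator: leases disjoint counter ranges."""

    def __init__(self, range_size: int = 1_000_000, start: int = 1) -> None:
        self._next_start = start
        self._size = range_size
        self._lock = threading.Lock()

    def lease_range(self) -> tuple:
        with self._lock:
            low = self._next_start
            self._next_start += self._size
            return low, low + self._size - 1


class Worker:
    """A worker host that generates keys from its leased range."""

    def __init__(self, coordinator: RangeCoordinator) -> None:
        self._coordinator = coordinator
        self._next, self._last = coordinator.lease_range()

    def next_short_key(self) -> str:
        if self._next > self._last:  # range exhausted: lease a fresh one
            self._next, self._last = self._coordinator.lease_range()
        n = self._next
        self._next += 1
        return base62(n)


coordinator = RangeCoordinator()
w1, w2 = Worker(coordinator), Worker(coordinator)
print(w1.next_short_key(), w2.next_short_key())
```

Workers only contact the coordinator once per range, so key generation needs no per-request coordination and no uniqueness check against the DB. If a worker crashes, the rest of its leased range is simply never used.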
